Pets

My employer has always lived on the cloud. We started running on Google App Engine, and for the last decade, the platform has served us well. However, some of our complex workloads required more compute power than App Engine (standard runtime) is willing to provide, so it wasn’t long before we had some static servers in EC2. These were our first ‘pets’. What is a ‘pet’ server?

In the old way of doing things, we treat our servers like pets, for example Bob the mail server. If Bob goes down, it’s all hands on deck. The CEO can’t get his email and it’s the end of the world.

– Randy Bias and Bill Baker

If you were a sysadmin any time from 1776-2012, this was your life. At my previous employer, we even gave our servers hostnames that were the last names of famous scientists and mathematicians. Intentional or not, you get attached, and sometimes little fiefdoms even arise (“Oh, DEATHSTAR is Steve’s server, and Steve does not want anyone else touching that!”).

Cattle, not pets

In the new way, servers are numbered, like cattle in a herd. For example, www001 to www100. When one server goes down, it’s taken out back, shot, and replaced on the line.

– Randy Bias and Bill Baker

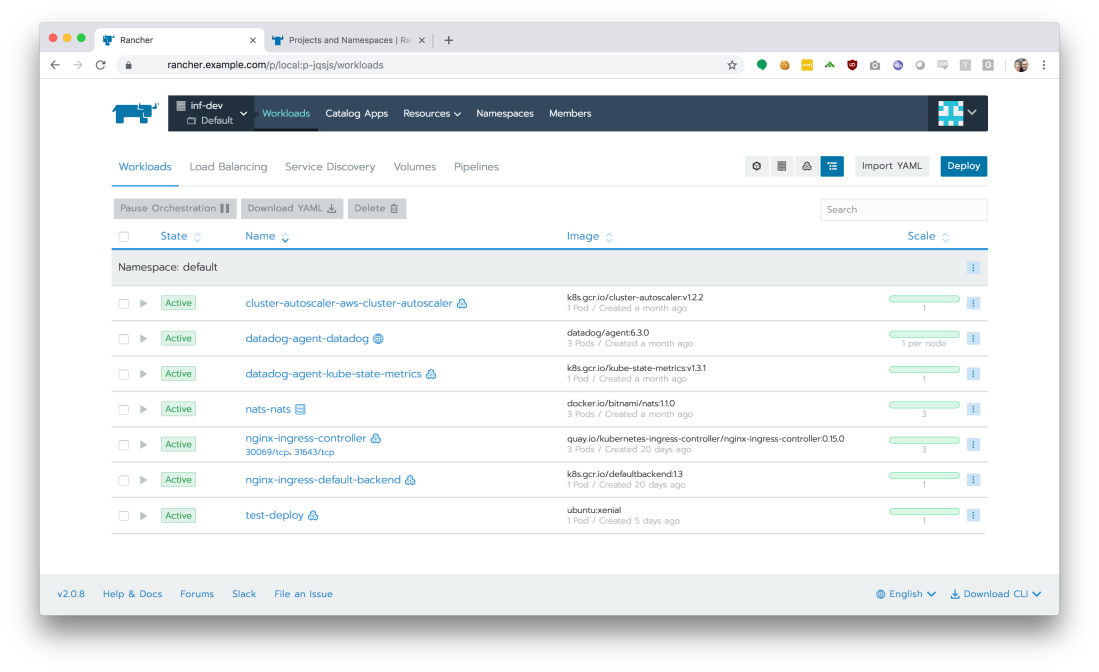

As we grew, it became obvious that we needed a platform which would allow us to perform long-running jobs, complex computations, and maintain a higher degree of control over our infrastructure. We started the dive off of Google App Engine and are now invested in Kubernetes, specifically using the AWS Elastic Kubernetes Service (EKS). Those static servers in EC2 that I mentioned are also coming along for the ride, with the last few actively undergoing the conversion to running as containers. Soon, every production workload we have will exist solely as a Docker container, run as a precisely-managed herd.

Running a container platform

Kubernetes isn’t necessarily trivial to run. Early on, we realized that using AWS Elastic Kubernetes Service (EKS) was going to be the quickest way to a production-ready deployment of Kubernetes.

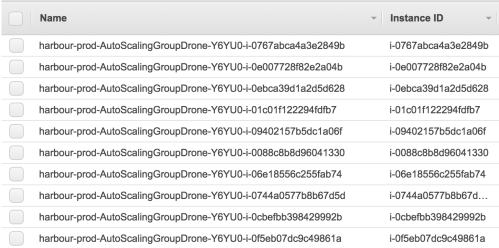

In EKS, we are only responsible for running the Nodes (workers). The control plane is entirely managed by AWS, abstracted away from our view. The workers are part of a cluster of systems which are scaled based on resource utilization. Other than the containers running on them, every single worker is identical to the others.

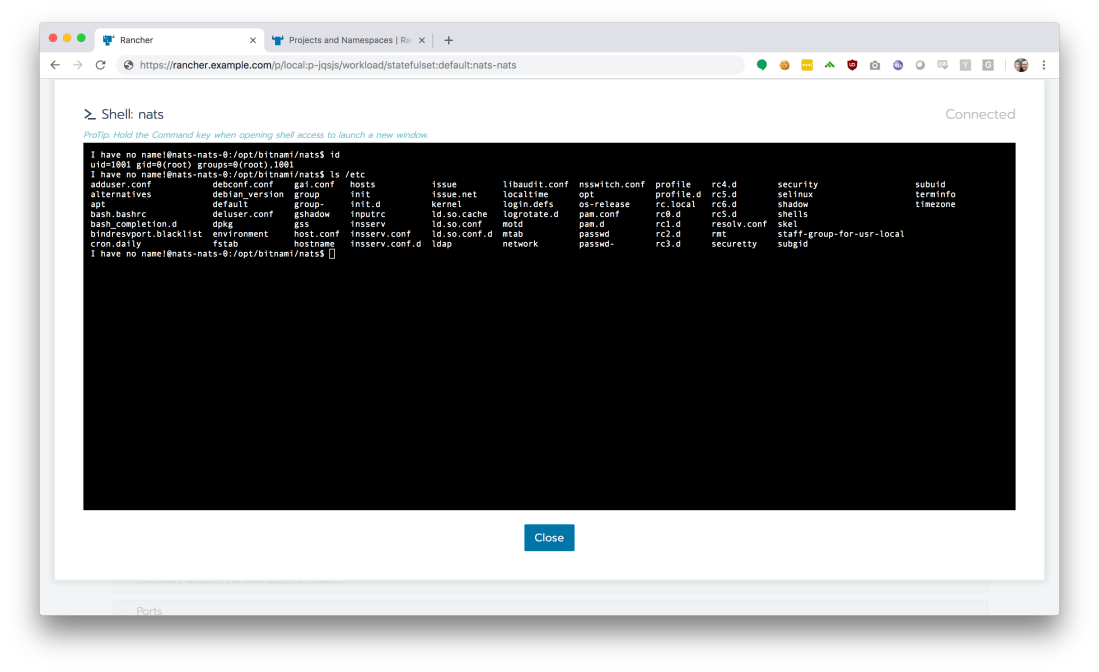

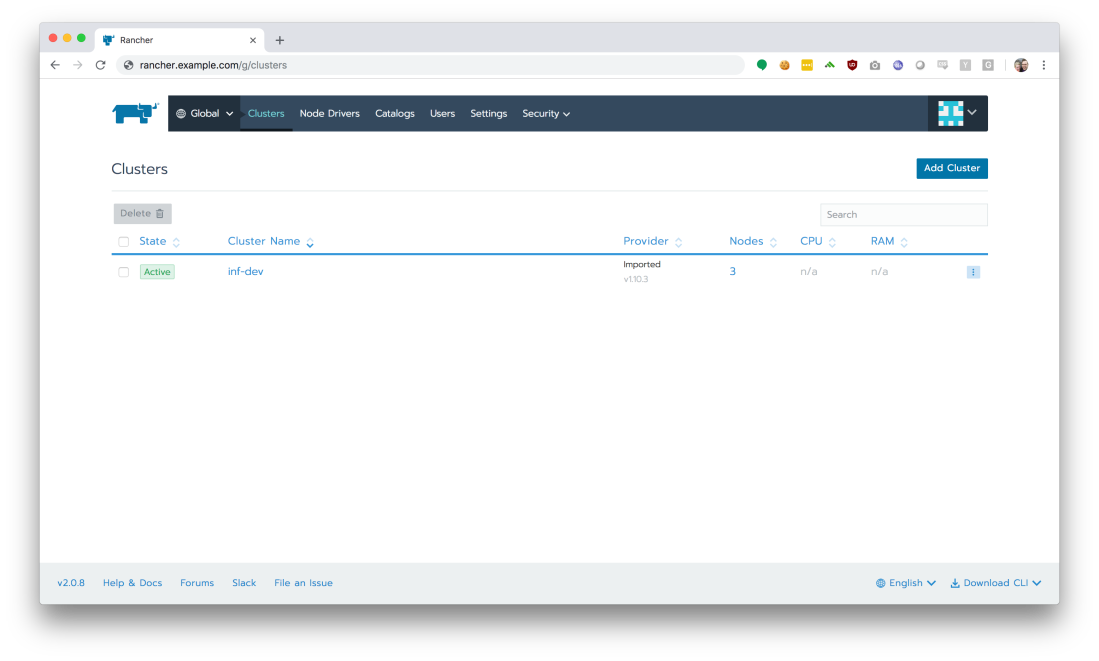

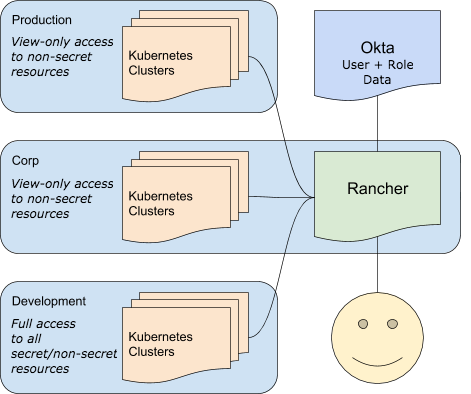

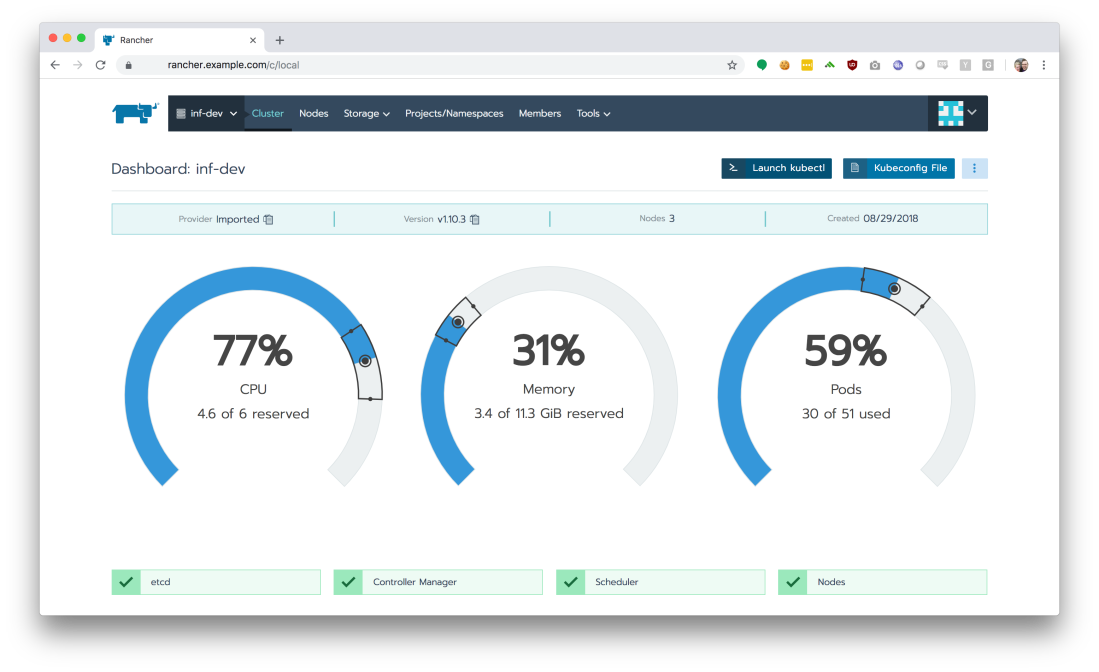

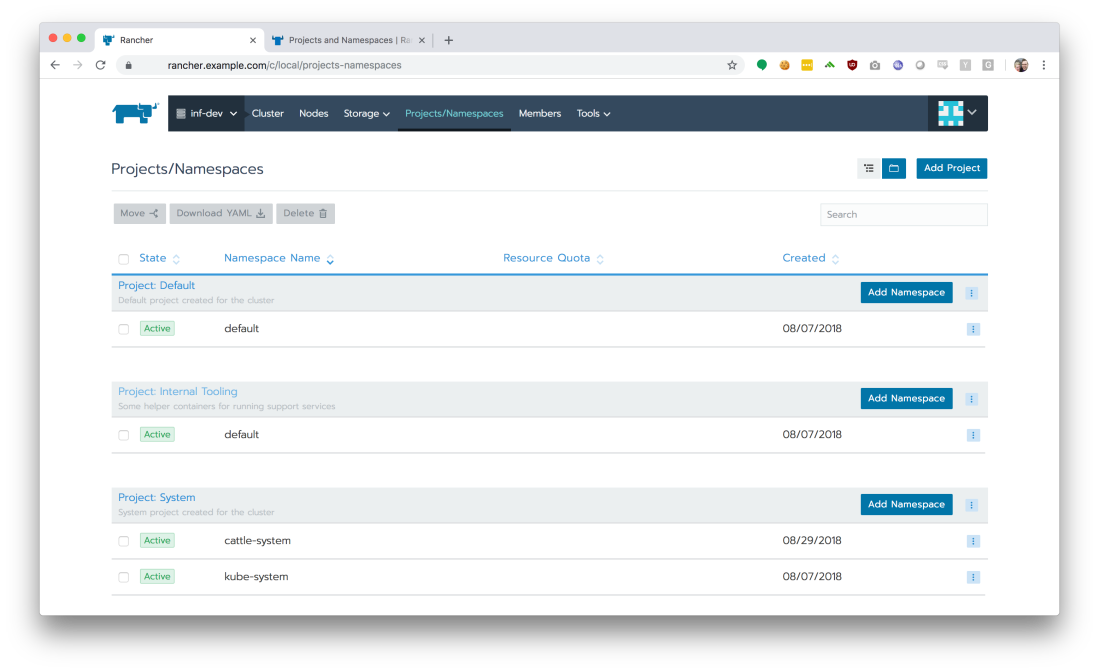

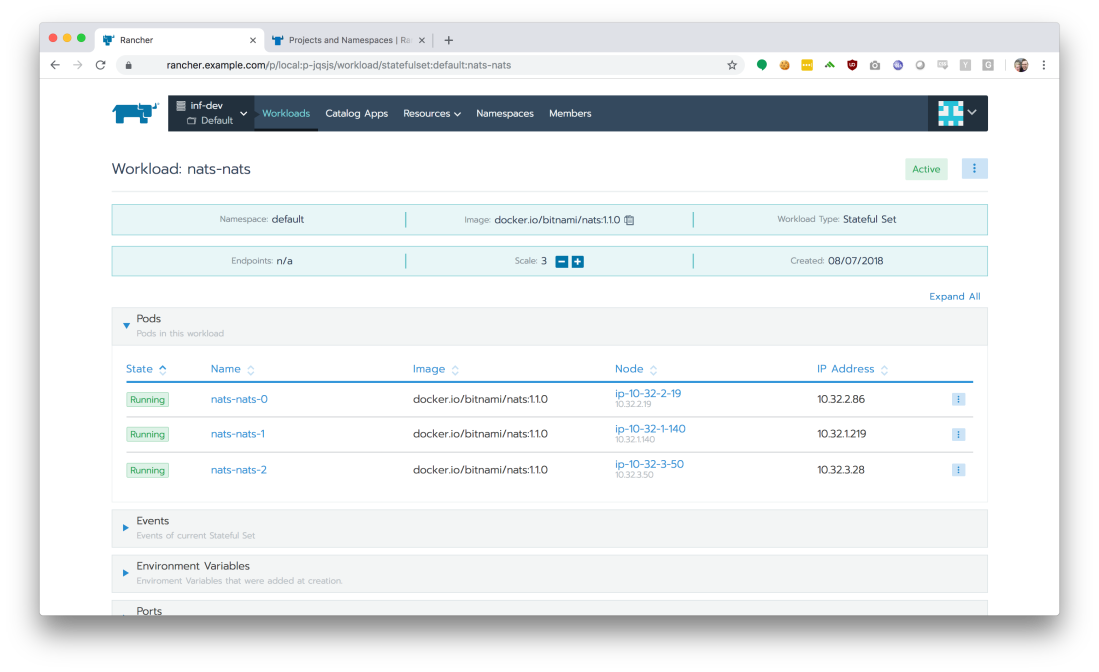

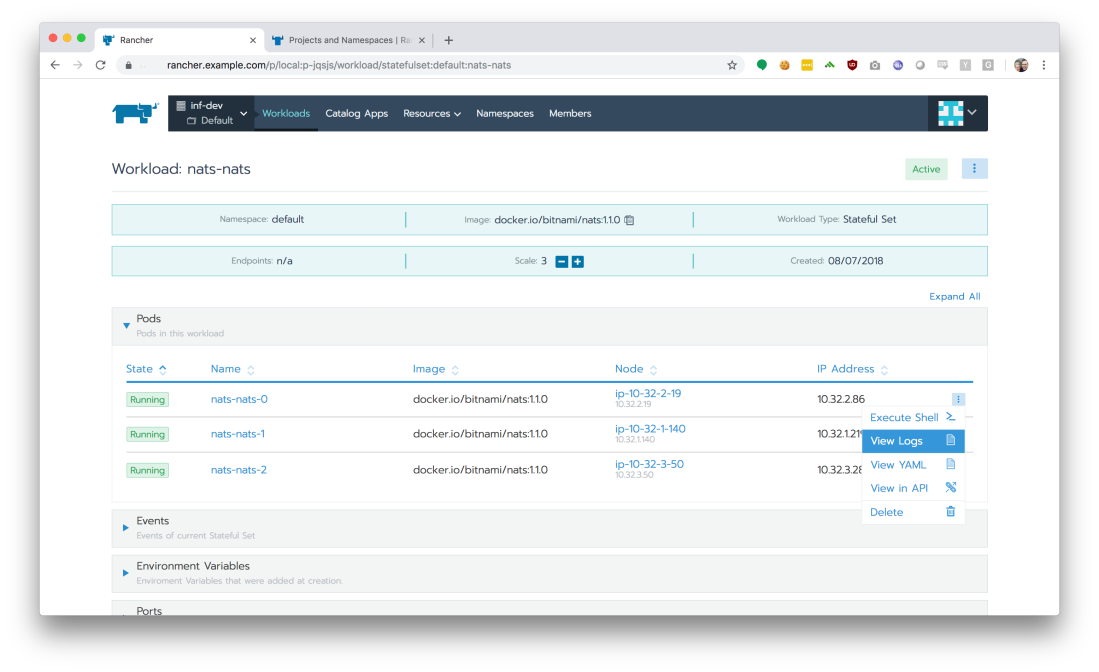

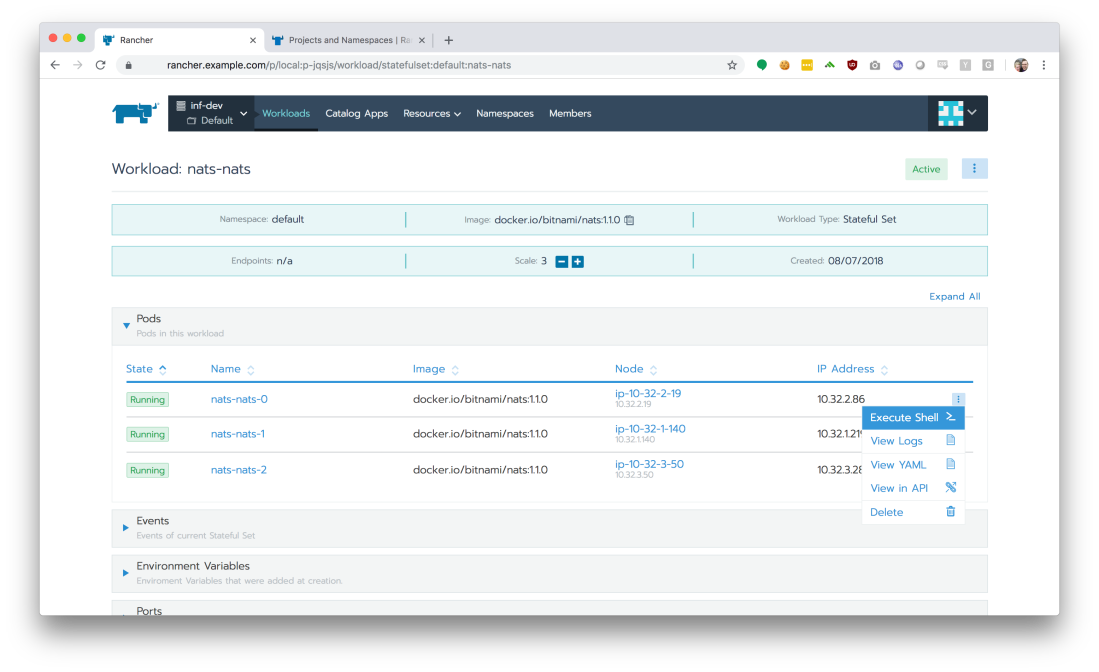

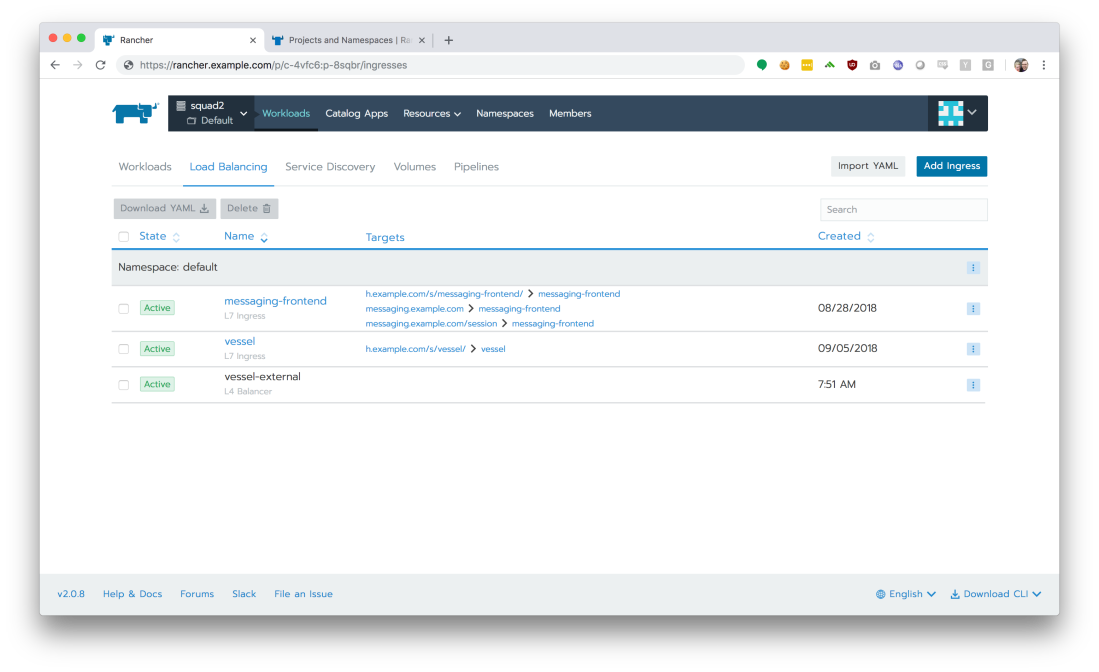

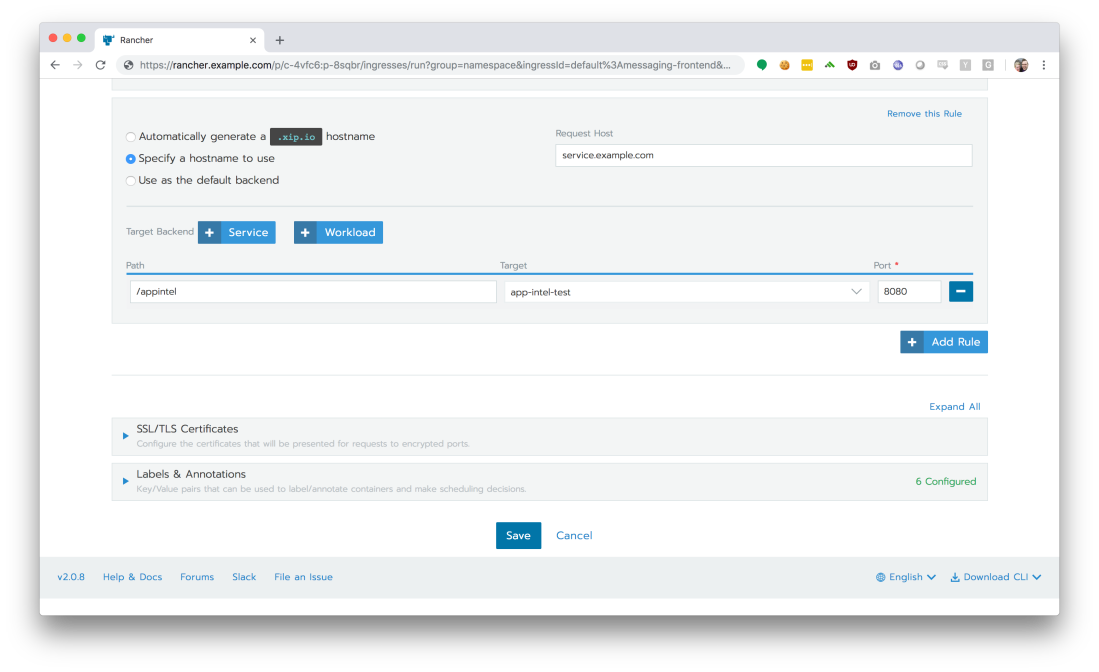

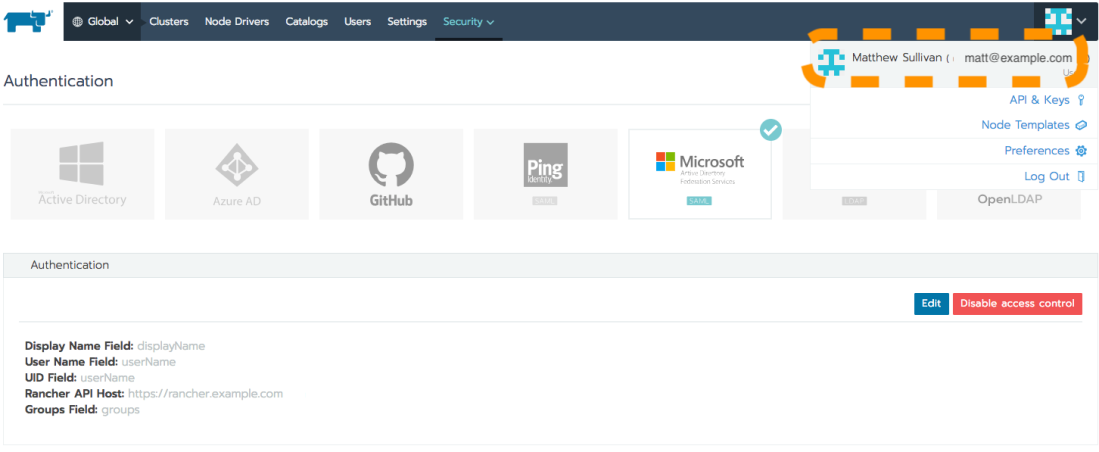

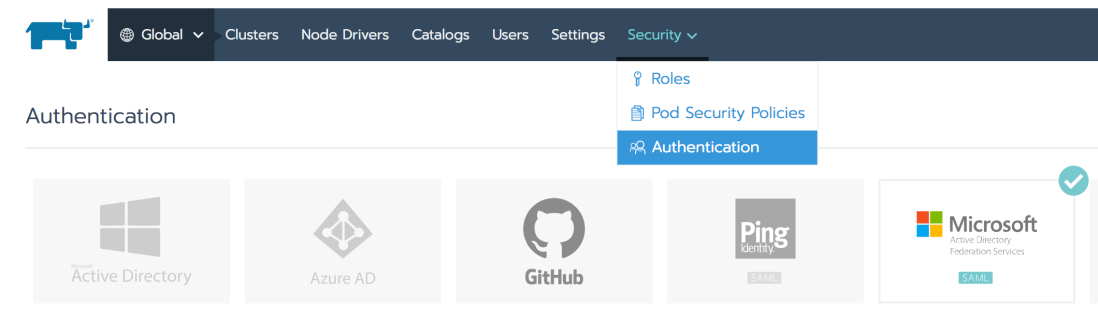

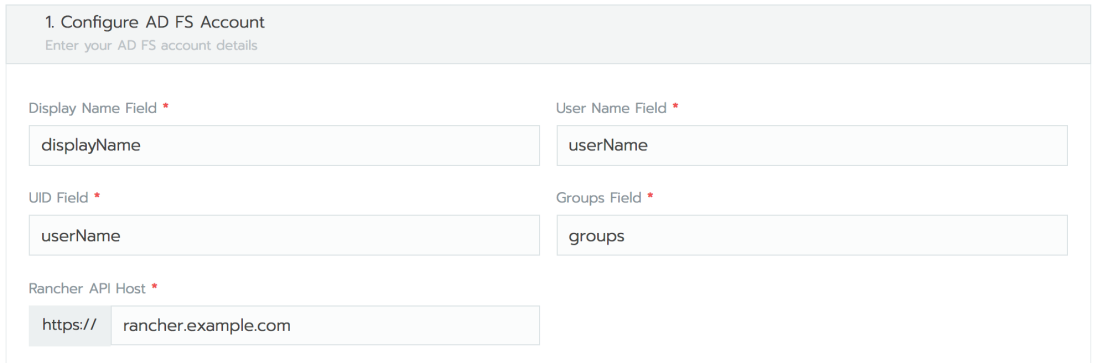

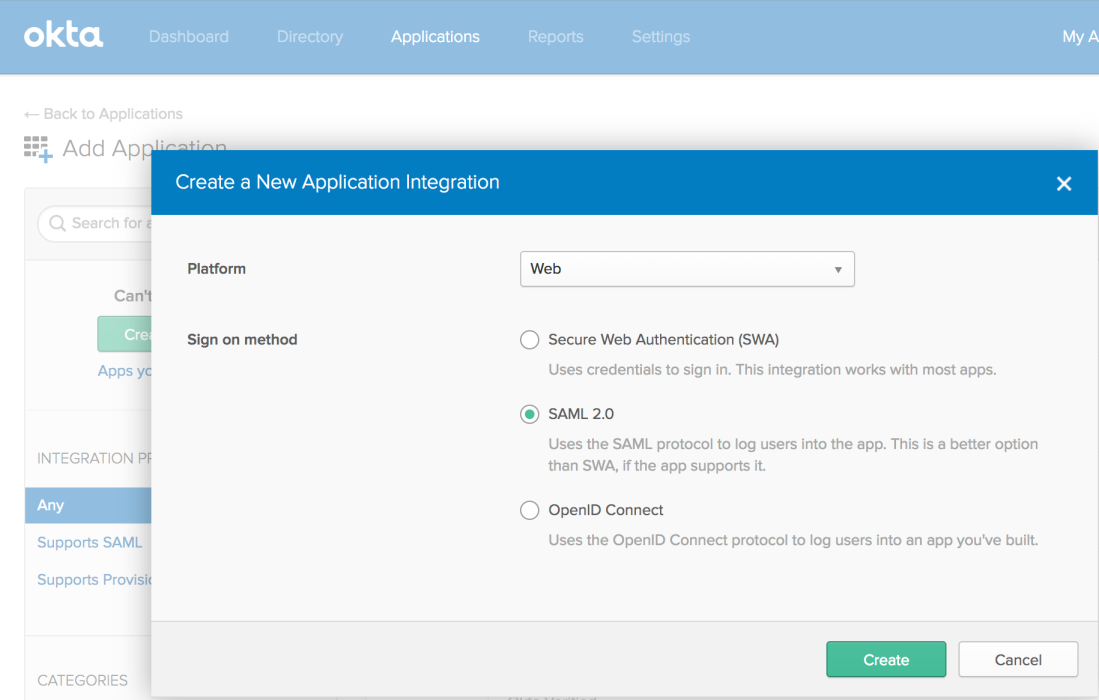

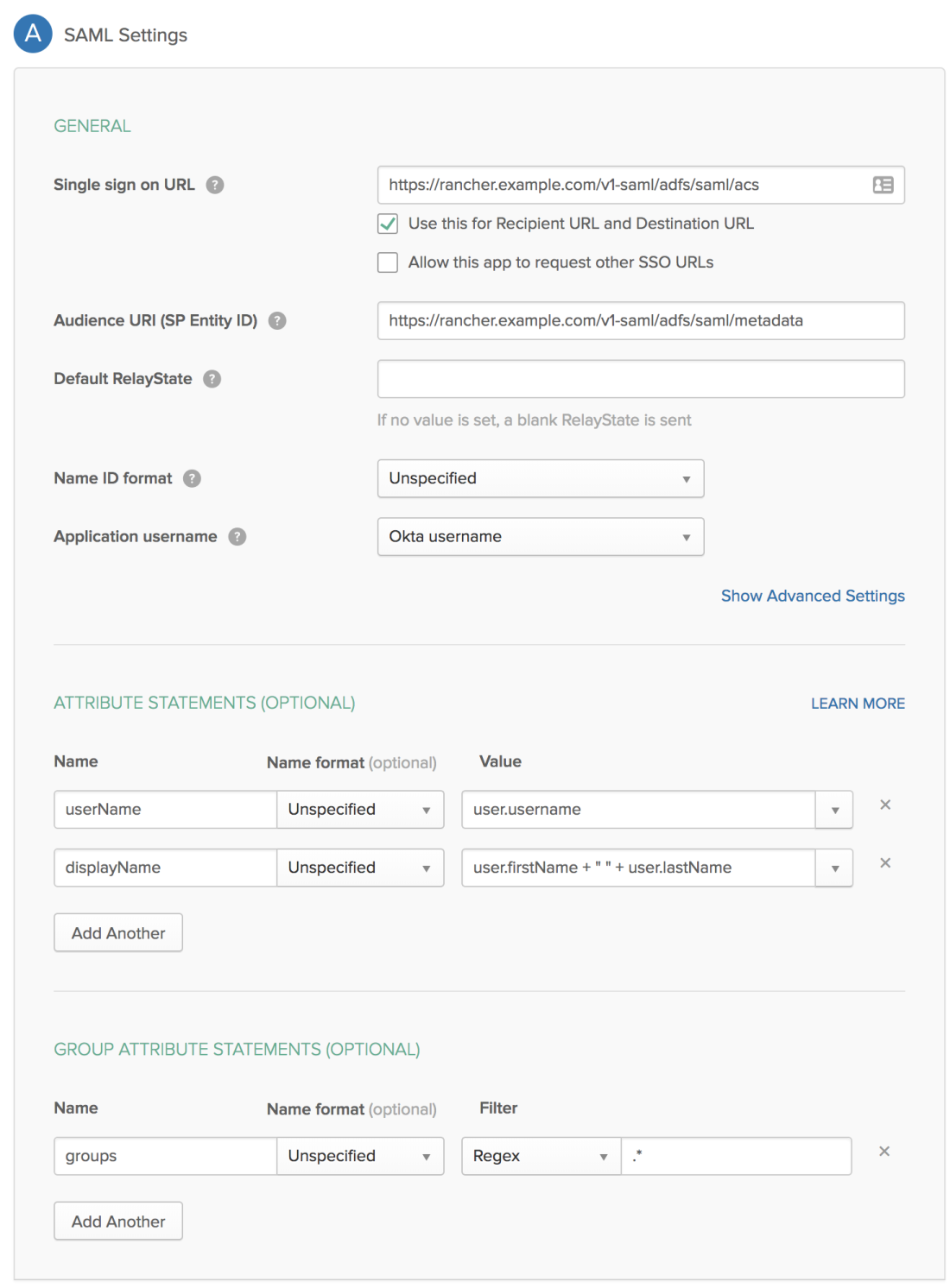

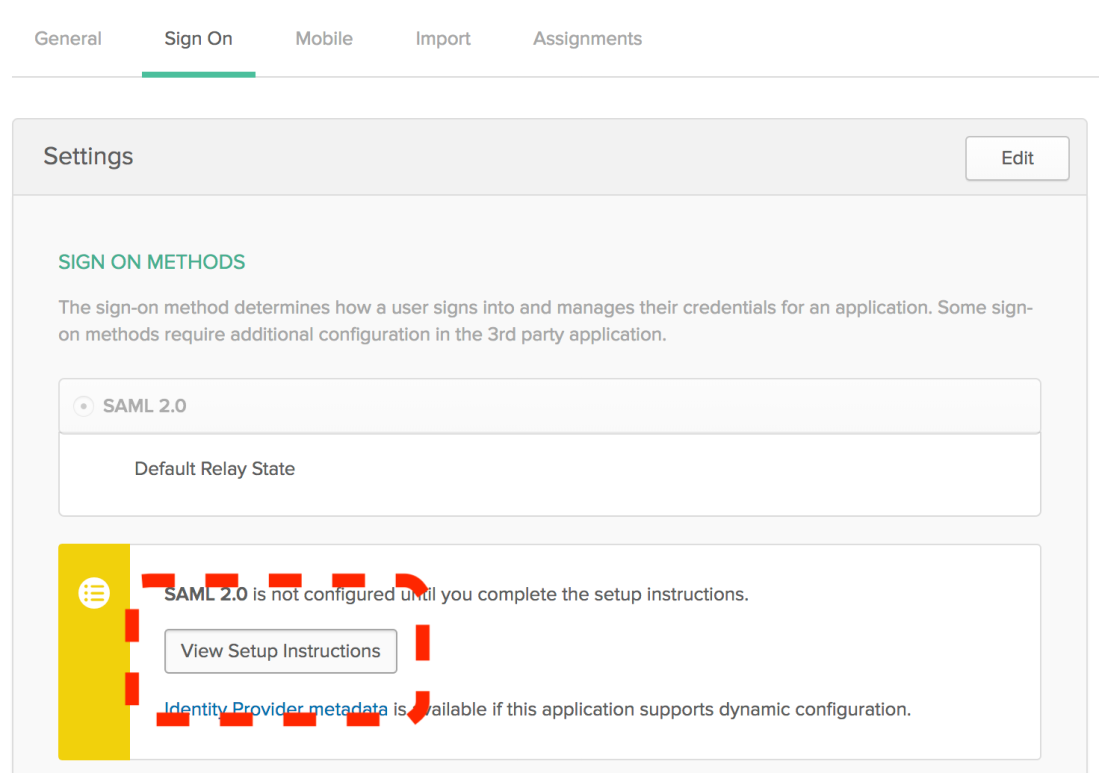

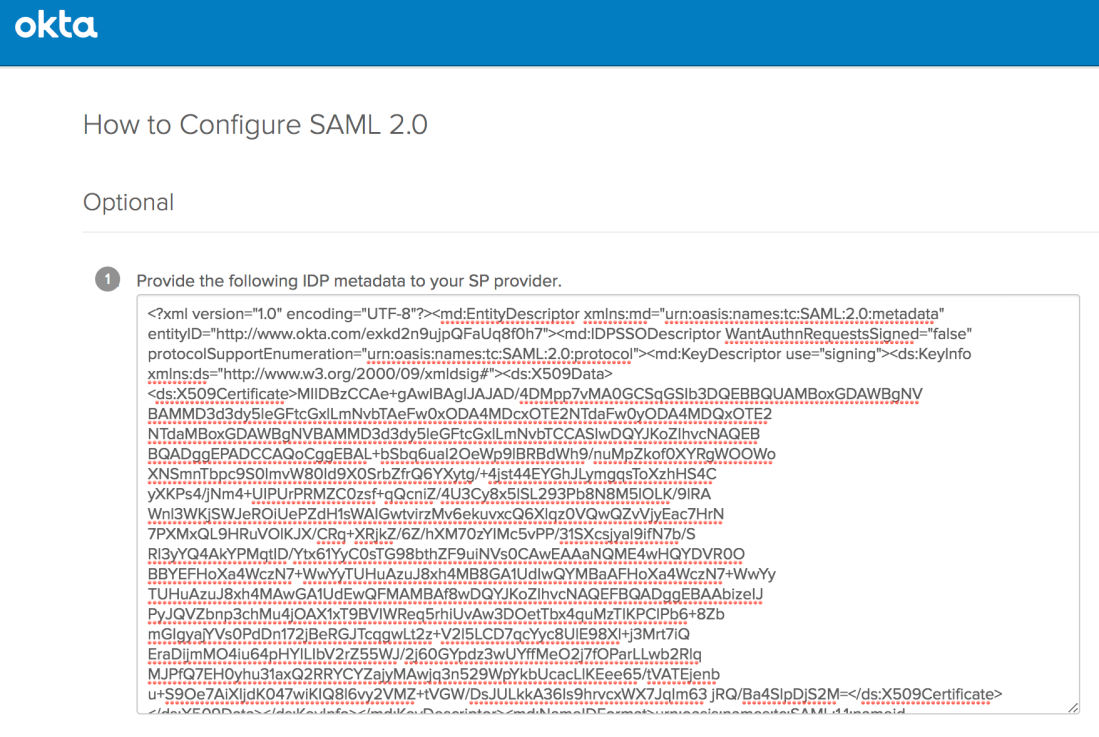

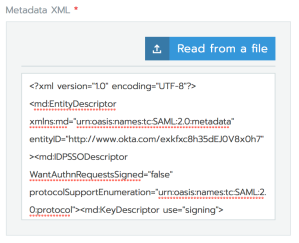

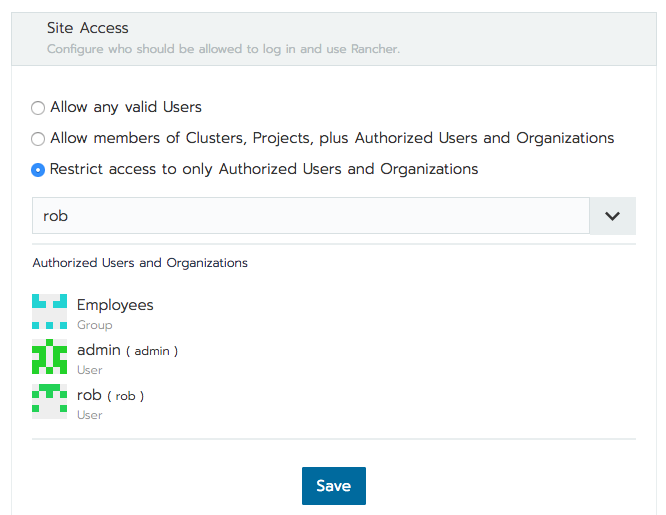

We use Rancher to help us manage our Kubernetes clusters. Rancher manages our Cattle. How cheeky.

Managing the herd

The rest of this blog post will be primarily dedicated to discussing how we build and manage our worker nodes. For many organizations (like ours), simply using the defaults is not an option, as nice as that would be. A complex web of compliance frameworks, customer requirements, and best practices means that we must adhere to a number of additional security-related controls that aren’t supplied out-of-the-box. These controls were largely designed for how IT worked over a decade ago, and it takes some work to meet all of them in the world of containers and cattle.

So how do we manage this? It’s actually fairly straightforward.

Building cattle

- Each node is built from code, specifically via HashiCorp Packer build scripts.

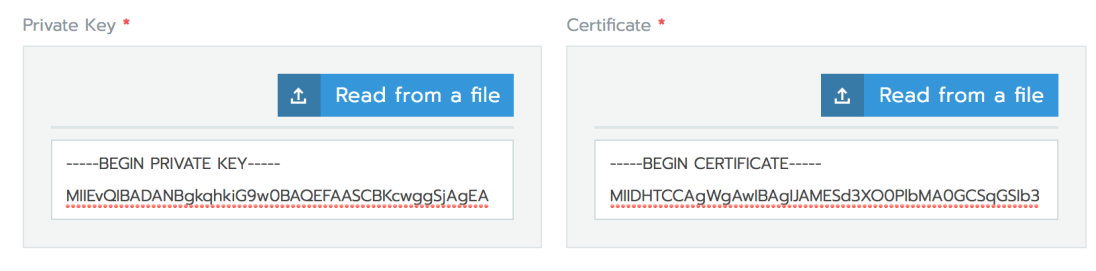

- Each node includes nothing but the bare minimum amount of software required to operate the node. We start with EKS’ public Packer build plans (thanks AWS!) and add a vulnerability scanning agent, a couple of monitoring agents, and our necessary authentication/authorization configuration files.

- For the base OS, we use Amazon Linux 2, security-hardened to the CIS Level 1 Server Benchmark for Red Hat (because there is no benchmark for AL2 at this time). This took a bit of time and people-power, but we will be contributing it back to the community as open-source so everyone can benefit (it will be available here).

- This entire process happens through our Continuous Delivery pipeline, so we can build/deploy changes to this image in near real-time.
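
To make the build steps above a little more concrete, here is a minimal sketch of what generating a Packer template from code could look like. It is not our actual pipeline: the AMI name filter, instance type, package names, and the `harden-cis-level1.sh` script are all placeholders I made up for illustration. It emits Packer's legacy JSON template format with an `amazon-ebs` builder and two `shell` provisioners.

```python
import json
from typing import Dict, List


def build_packer_template(region: str, extra_packages: List[str]) -> Dict:
    """Return a Packer (legacy JSON format) template as a plain dict.

    Everything specific here (AMI filter, instance type, package names,
    hardening script path) is an illustrative assumption.
    """
    install_cmds = [f"sudo yum install -y {pkg}" for pkg in extra_packages]
    return {
        "builders": [{
            "type": "amazon-ebs",
            "region": region,
            "instance_type": "t3.medium",          # assumed build instance size
            "ssh_username": "ec2-user",
            "ami_name": "hardened-node-{{timestamp}}",
            "source_ami_filter": {
                # Assumed name pattern for the Amazon Linux 2 base image.
                "filters": {"name": "amzn2-ami-hvm-*-x86_64-gp2"},
                "owners": ["amazon"],
                "most_recent": True,
            },
        }],
        "provisioners": [
            # Pick up OS updates at build time, then add the extra agents.
            {"type": "shell", "inline": ["sudo yum update -y"] + install_cmds},
            # Hypothetical script that applies the CIS Level 1 hardening.
            {"type": "shell", "script": "harden-cis-level1.sh"},
        ],
    }


if __name__ == "__main__":
    # Hypothetical agent package names.
    template = build_packer_template(
        "us-east-1", ["example-vuln-scanner", "example-metrics-agent"]
    )
    print(json.dumps(template, indent=2))
```

Writing the output to a file and handing it to `packer build` from a CI job is one way the "everything through the pipeline" idea could be wired up.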

Running cattle

- At least weekly, we rebuild the base image using the steps above. Why at least weekly? This is the process by which we pick up all our OS-level security updates. For true emergencies (e.g. Heartbleed), we could get a new image built and fully released to production in under an hour.

- We deploy the base image to our dev/staging Kubernetes environments and let it bake and undergo automated testing for a predetermined period of time.

- At the predetermined time, we simply switch the AWS autoscaling group settings to reference the new AMI, and then AWS removes the old instances from service.
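
As a rough illustration of that last step, the sketch below shows one way to point an Auto Scaling group at a new AMI and let AWS replace the old instances. It assumes the group uses a launch template (not a launch configuration) and relies on the instance refresh feature; neither is necessarily how our setup works. The `ec2` and `autoscaling` arguments are expected to be boto3 clients (for example `boto3.client("ec2")`), and the function name and the 90% healthy threshold are my own choices.

```python
def roll_out_ami(ec2, autoscaling, launch_template_id, asg_name, new_ami_id):
    """Point an Auto Scaling group at a new AMI and start replacing old nodes.

    `ec2` and `autoscaling` are boto3-style clients passed in by the caller,
    which keeps this function easy to exercise with fakes.
    """
    # Create a new launch template version that differs only in the AMI.
    response = ec2.create_launch_template_version(
        LaunchTemplateId=launch_template_id,
        SourceVersion="$Latest",
        LaunchTemplateData={"ImageId": new_ami_id},
    )
    version = response["LaunchTemplateVersion"]["VersionNumber"]

    # Tell the Auto Scaling group to launch new instances from that version.
    autoscaling.update_auto_scaling_group(
        AutoScalingGroupName=asg_name,
        LaunchTemplate={
            "LaunchTemplateId": launch_template_id,
            "Version": str(version),
        },
    )

    # Ask AWS to cycle out instances built from the old AMI, a few at a time.
    refresh = autoscaling.start_instance_refresh(
        AutoScalingGroupName=asg_name,
        Strategy="Rolling",
        Preferences={"MinHealthyPercentage": 90},  # assumed threshold
    )
    return refresh["InstanceRefreshId"]
```

Note that this only covers the AWS side. Draining Kubernetes workloads off a node before it is terminated is a separate concern that this sketch does not handle.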

Security, as code

My role requires me to regularly embarrass myself by being a part of customer audits, as well as being the primary technical point of contact for our FedRAMP program (which means working through a very thorough annual assessment). This concept of having ‘cattle’ is so foreign to most other Fortune 500 companies that I might as well claim we run our software from an alien spaceship. We lose most of them at the part where we don’t run workloads on Windows, and then it all really goes off the rails when we explain we run containers in production, and have for years. Despite the confused looks, I always go on to describe how this model is a huge bolster to the security of the platform. Let’s list some benefits:

Local changes are not persisted

Our servers live for about a week (and sometimes not even that long, thanks to autoscaling events). Back in my pentesting days, one of the most important ways for me to explore a network was to find a foothold and then embed solidly into a system. When the system might disappear at any given time, it’s a bit more challenging to set up shop without triggering alarms. In addition, all of our workloads are containerized, running under unprivileged user accounts, with only the bare minimum of packages needed to run a service. Even if you break into one of these containers, it’s (in theory) going to be extraordinarily difficult to move around the network. Barring any 0-days or horrific configuration oversights, it’s also next-to-impossible to compromise the node itself.

This lack of long-lived servers also helps bolster the compliance story. For example, if an administrator makes a change to a server that allows password-based user authentication, the unauthorized change will be thrown away upon the next deploy.
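
The "no server lives longer than about a week" property is also easy to check mechanically. Here is a small sketch, assuming you already have each node's launch time from somewhere (the cloud API, your inventory, etc.). The function name and the seven-day limit are illustrative, not something we actually run.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy: nothing should outlive the weekly rebuild cycle.
MAX_NODE_AGE = timedelta(days=7)


def overdue_nodes(launch_times, now=None, max_age=MAX_NODE_AGE):
    """Return the names of nodes older than max_age, oldest first.

    launch_times maps node name -> timezone-aware launch datetime.
    """
    now = now or datetime.now(timezone.utc)
    overdue = [
        (now - launched, name)
        for name, launched in launch_times.items()
        if now - launched > max_age
    ]
    # Sort by age, largest first, so the worst offenders lead the list.
    return [name for _, name in sorted(overdue, reverse=True)]
```

Anything this returns is a candidate pet: a node that has stuck around long enough to accumulate local changes.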

When everything is code, everything has an audit trail

Infrastructure as code is truly a modern marvel. When we can build entire cloud networks using some YAML and a few scripts, we can utilize the magic of git to store robust change history, maintain attribution, and even mark major configuration version milestones using the concept of release tags. We can track who made changes to configuration baselines, who reviewed those changes, and understand the dates/times those changes landed in production.

Now what about your logging standards? Your integrity checks? Your monitoring agent configurations? With pets, 1-5% of your servers are going to be missing at least one of these, either because of misconfiguration or simply because it never got done in the first place. With cattle, the configurations will be present, every time, ensuring that you have your data when you need it.

Infrastructure is immutable, yet updated regularly

In the “old” way, where you own pets, handling OS updates is a fickle process. For Windows admins, this means either handling patches manually by logging in and running Windows Update, or running a WSUS server and testing/pushing patches selectively, while dealing with the fact that WSUS breaks constantly. On Linux, getting the right patches installed typically means some poor sysadmin logging into the servers at midnight and spending the next 2 hours copy/pasting a string of upgrade calls to apt, or shelling out a decent amount of cash for one of the off-the-shelf solutions available from the OS vendors themselves. Regardless of the method, in most situations what actually happens is that everyone is confused, not everything is fully patched, and risk to the organization is widespread.

With cattle, we build our infrastructure, deploy it, and never touch it again. System packages are fully updated as part of the Packer build scripts (example), and no subsequent calls to update packages are made (*note: Amazon Linux 2 does check for and install security updates upon first boot, so in the event of a revert to a previously-deployed build, you still have your security patches, though at the cost of start-up time). What we end up with is an environment running on all the latest and greatest packages that is both reliable and safe. Most importantly, servers aren’t going to be accidentally missed during patching windows, ephemeral OS/networking issues won’t leave one or two servers occasionally unpatched, and no sysadmins have to try and get all the commands pasted into the window correctly at 1:13 in the morning.

Parting thoughts

No pride in tech debt

While I know this whole blog post comes across with a tone of, “look what we have made!”, please make no mistake: I consider this all to be tech debt. We have pushed, and will continue to push, our vendors to bake in these features from day one. I know that my team’s time is better spent working on making our products better, not on making custom-built nodes that adhere to CIS benchmarks. When the day comes, we’ll gladly throw this work away and use a better tool, should one become available.

The cost of pets

Pets never seem that expensive if all you use to quantify their costs is the server bill at the end of the month. Be in tune with the human and emotional costs. Be mindful of the business risk.

- There’s a human cost associated with performing the midnight security updates.

- There’s a risk cost associated with running a production server that only one person can ‘touch’.

- There’s a massive risk cost associated with human error during planned (or unplanned) maintenance and one-offs.

- There’s a risk cost associated with failing to patch and properly control a fleet of pets.

Sometimes identifying pets and converting them to cattle is an unpleasant process, especially for the owners of those systems. Be communicative and understanding, and always offer to help during every step of the way.

That’s all

Thanks for sticking with me; I know this post was a long one. If you have any questions, thoughts, or comments, feel free to hit me up or comment below.