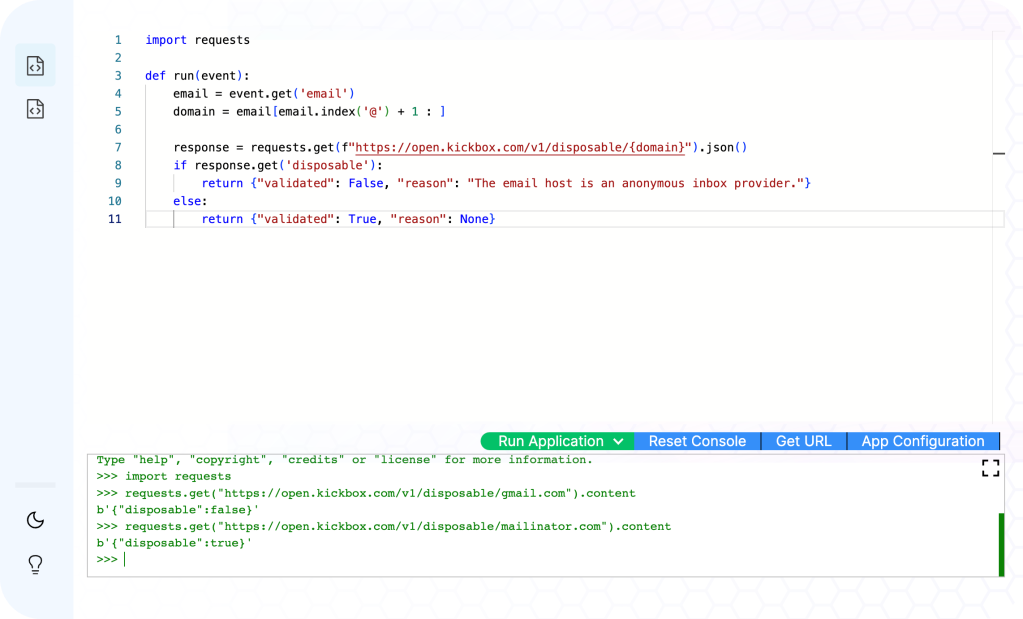

Integral Cloud is a cloud platform which helps knowledge workers write and run small Python applications. I learned a ton of new and interesting things while building this platform, and for the next few weeks, I’ll be documenting my learnings in this series. If you try it and have any feedback (good or bad), please reach out!

RCE as a Service

Running someone else’s code is not a great idea, but this feature is pretty much the core functionality of Integral Cloud.

Many products now exist to solve this challenge. They typically either use sandboxing (like vm2), run the code in a Docker container and pray there are no kernel 0-days anytime soon, or run it on a virtual machine that can be started really, really quickly, such as with Firecracker.

Because Python is the language most knowledge workers have been exposed to¹, it was my target language for the MVP. Unfortunately, vm2 is for running JavaScript (and while the Python interpreter can be compiled to WebAssembly, the performance isn’t great). Docker containers just don’t provide the host-level isolation guarantees needed to run multi-tenant, business-critical workloads. Firecracker is a great choice: very secure and lightning fast.

If Firecracker is good enough for AWS to run Fargate and Lambda on top of, it’s good enough for me. Now, let’s figure out how I’m going to run it…

Me, in about September 2022

About an hour into that process and 16 tabs deep, my brain finally connected a few important neurons, and I had the sudden realization that the entire compute layer for this product could just be built right on top of AWS Lambda. Very secure. Very cheap. Very scalable.

Run that code!

We can fast-forward through all the boring development stuff for a second. I wrote a backend. I wrote S3 triggers to do AWS Lambda deploys. I wrote a Lambda to package other Lambdas. I wrote dependency installers.

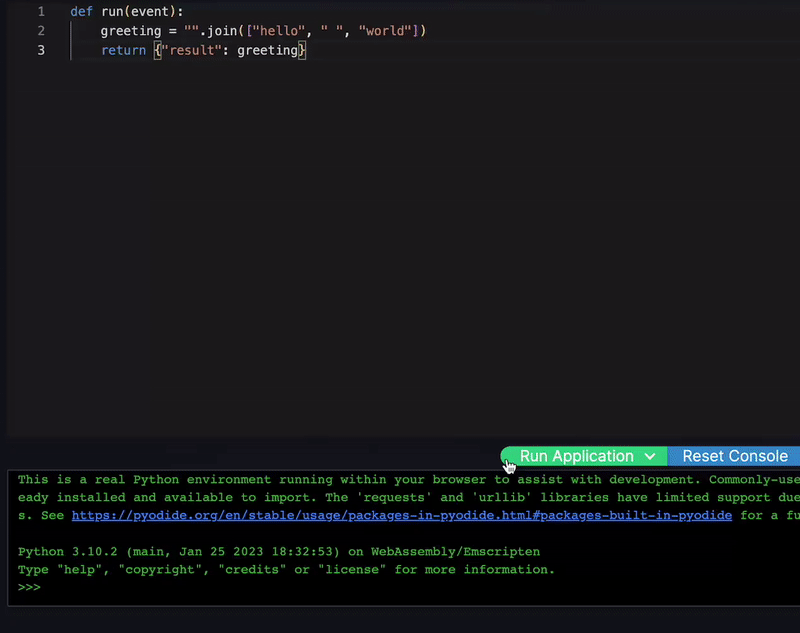

Tada! You can write some code, hit save, and around 10-15 seconds later, you can run it.

I’m feeling pretty proud until I actually try to use it, and the experience is awful. Small typo? 15-second delay. Want to test some new functionality you are super excited about? Awesome… after a 15-second delay. Iterating in this environment is, frankly, terrible.

Clearly I need to figure out how to deliver fresh code to an AWS Lambda deployment without rebuilding it.

Delivering fresh code to an AWS Lambda deployment without rebuilding it

AWS Lambda functions will remain warm for anywhere from 15 minutes to over an hour, depending on their configuration, region, and other factors. Harnessing this “warmth” would certainly lead to a better user experience, and could cut the time required to update an app from 15 seconds or so to less than 1 second. I just needed to figure out how to get the code where it needed to go.
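The “warmth” comes from Lambda reusing an execution environment between invocations, so anything stored at module scope survives until the environment is recycled. A minimal sketch of that behavior, assuming a plain Python handler; `handler` and `_state` are illustrative names, and AWS doesn’t promise how long any particular environment stays warm:

```python
import time

# Module-level code runs once, when the execution environment starts (cold start).
# While the environment stays warm, this dictionary survives between invocations.
_state = {"started_at": time.time(), "invocations": 0}


def handler(event, context):
    """Hypothetical handler that reports whether this call hit a warm environment."""
    _state["invocations"] += 1
    return {
        "cold_start": _state["invocations"] == 1,
        "invocations_so_far": _state["invocations"],
        "environment_age_s": round(time.time() - _state["started_at"], 3),
    }


# Locally, two calls in one process behave like two invocations of a warm function.
print(handler({}, None)["cold_start"])  # True
print(handler({}, None)["cold_start"])  # False
```

A cached copy of a user’s code held in module-level state (or in files under /tmp) benefits in the same way, which is what the rest of this approach relies on.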

Users’ code is already stored at rest in S3, so simply synchronizing the latest copy over to the Lambda crossed my mind. However, I also wanted to support running user applications without the user having actually saved their changed files (after all, maybe the user wants to test an idea real quick). For that reason, I decided S3 wasn’t the best option.

Invocation payloads are limited to 6 MB for synchronous invocations and 256 KB for asynchronous ones. Integral Cloud runs these invocations asynchronously, which puts us under the 256 KB limit.

256 KB doesn’t seem like much in 2023, but most Python source files are only a few kilobytes, a small fraction of that. There is plenty of headroom to bundle up recently changed source files and send them along in the Event with the call to start the Lambda.
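To put numbers on that headroom, here is a sketch of bundling changed files into an event and checking the encoded size before sending it. It mirrors the `action`/`updated_content` fields of Integral Cloud’s events, but `build_execute_event` is a hypothetical helper, and treating 256 KB as 256 × 1024 bytes of UTF-8 JSON is an assumption:

```python
import json
import time

# Asynchronous invocation payload limit discussed above, in bytes (assumed 256 * 1024).
ASYNC_PAYLOAD_LIMIT = 256 * 1024


def build_execute_event(changed_files: dict[str, str]) -> dict:
    """Bundle unsaved source files into an "execute" event (hypothetical helper).

    `changed_files` maps a filename such as "app.py" to its current content.
    """
    now_ms = int(time.time() * 1000)  # millisecond timestamp, like the example payload
    event = {
        "action": "execute",
        "updated_content": [
            {"filename": name, "content": content, "timestamp": now_ms}
            for name, content in changed_files.items()
        ],
    }
    size = len(json.dumps(event).encode("utf-8"))
    if size > ASYNC_PAYLOAD_LIMIT:
        raise ValueError(f"event is {size} bytes; the limit is {ASYNC_PAYLOAD_LIMIT}")
    return event


event = build_execute_event({"app.py": "def run(event):\n    print('hi')\n"})
print(len(json.dumps(event)), "bytes")  # well under the limit
```

The resulting dict could then be serialized with `json.dumps` and sent with boto3’s Lambda client via `invoke(FunctionName=..., InvocationType="Event", Payload=...)`, where `InvocationType="Event"` selects asynchronous invocation.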

{

"action": "execute",

"updated_content":[

{

"filename":"app.py",

"content":"def run(event): [...]",

"timestamp":1681445727601

}

]

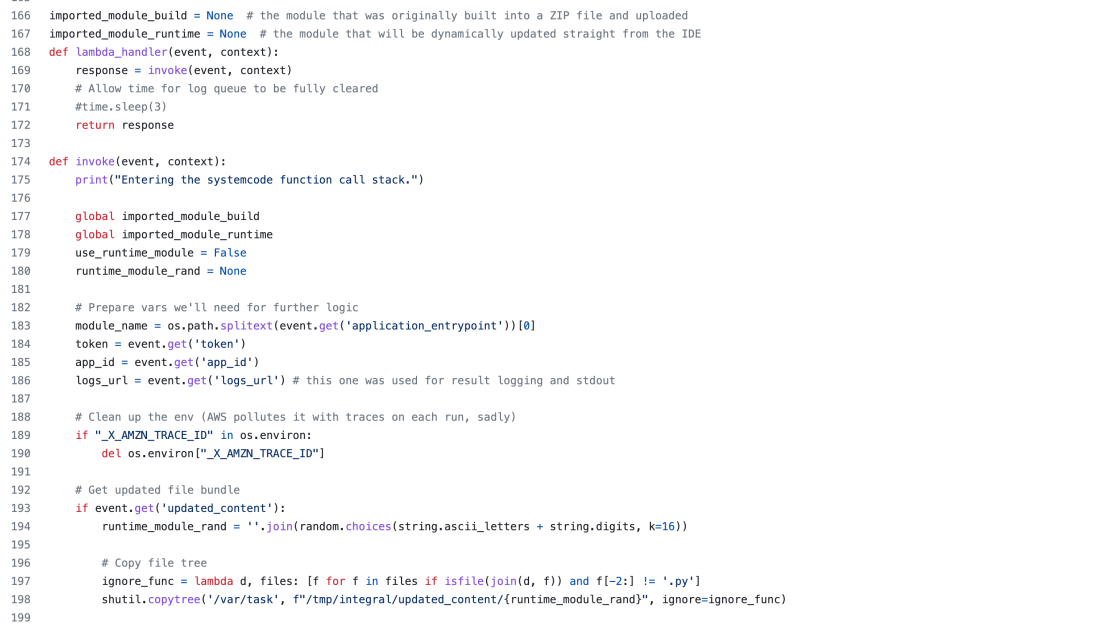

}Now let’s head into the AWS Lambda itself and see how we handle this data:

```python
import importlib
import shutil
import sys
from os.path import isfile, join

# Only /tmp is writable on an AWS Lambda.
TMP_ROOT = "/tmp/integral/updated_content"


def handler(event, context):  # handler name is illustrative
    if event.get('updated_content'):
        # Copy all Python files from the original bundle, located at /var/task,
        # to our temporary folder (directories are kept, non-.py files skipped).
        ignore_func = lambda d, files: [
            f for f in files if isfile(join(d, f)) and not f.endswith('.py')]
        # dirs_exist_ok=True lets a warm container overwrite its previous copy.
        shutil.copytree('/var/task', TMP_ROOT, ignore=ignore_func, dirs_exist_ok=True)

        # Write out the updated content on top of this file tree.
        for item in event['updated_content']:
            with open(join(TMP_ROOT, item['filename']), 'w') as f:
                f.write(item['content'])

        # Put the copy ahead of /var/task so reload() finds the new app.py.
        if TMP_ROOT not in sys.path:
            sys.path.insert(0, TMP_ROOT)

        # Reload the module.
        # This part has a ton of error handling and log redirection, but for brevity:
        try:
            importlib.reload(sys.modules['app'])  # reload() takes a module, not a name
        except Exception:
            pass
```

What the version of me from three months ago didn’t know, though, is that importlib.reload isn’t all it’s cracked up to be. A careful look through the documentation turns up a lot of caveats, and in testing, I encountered fatal errors on a regular basis.

For example, I found I was unable to reload a module that had already imported the requests library (doing this appeared to hose urllib3 somewhere down the line). I also ran into other oddities with third-party and built-in modules, with no clear idea of how to resolve them.
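One of the documented caveats is easy to reproduce. The sketch below is not the requests/urllib3 failure described above, just a minimal illustration: `reload()` re-executes the module and rebinds the names inside it, but anything captured before the reload (a `from ... import` binding, an existing instance) still points at the old objects. `demo_mod` and `Widget` are throwaway names:

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Write a throwaway module to a temporary directory and make it importable.
workdir = Path(tempfile.mkdtemp())
(workdir / "demo_mod.py").write_text("class Widget:\n    pass\n")
sys.path.insert(0, str(workdir))
importlib.invalidate_caches()

demo_mod = importlib.import_module("demo_mod")
StaleWidget = demo_mod.Widget   # the binding `from demo_mod import Widget` would create
old_widget = demo_mod.Widget()  # an instance created before the reload

importlib.reload(demo_mod)      # re-executes demo_mod.py inside the same module object

# reload() rebinds names inside the module only; earlier references go stale.
print(StaleWidget is demo_mod.Widget)           # False
print(isinstance(old_widget, demo_mod.Widget))  # False
```

In a long-lived process, every stale reference like this is a chance for old and new code to meet, which is one reason importing a brand-new module can be more predictable than reloading an existing one.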

Because these are typically very small Python apps, and because I’m in an environment where out-of-memory issues are handled relatively gracefully, I decided the next best course of action was to simply perform fresh imports of the user’s codebase on each invocation:

```python
import importlib
import random
import shutil
import string
import sys
from os.path import isfile, join

# Only /tmp is writable on an AWS Lambda.
TMP_PARENT = "/tmp/integral"
TMP_ROOT = join(TMP_PARENT, "updated_content")


def handler(event, context):  # handler name is illustrative
    if event.get('updated_content'):
        # Create a random string to name our new module.
        module_name = ''.join(random.choices(string.ascii_letters + string.digits, k=16))

        # Copy all Python files from the original bundle, located at /var/task,
        # into a brand-new subfolder, e.g.
        # /tmp/integral/updated_content/XCewGt7rO8OybtvZ
        ignore_func = lambda d, files: [
            f for f in files if isfile(join(d, f)) and not f.endswith('.py')]
        shutil.copytree('/var/task', join(TMP_ROOT, module_name), ignore=ignore_func)

        # Write out the updated content on top of this file tree.
        for item in event['updated_content']:
            with open(join(TMP_ROOT, module_name, item['filename']), 'w') as f:
                f.write(item['content'])

        # /tmp/integral must be on the import path for "updated_content.<name>.app"
        # to resolve (no __init__.py is needed; Python treats such folders as
        # namespace packages), and the import system must be told that new
        # files appeared since it last looked.
        if TMP_PARENT not in sys.path:
            sys.path.insert(0, TMP_PARENT)
        importlib.invalidate_caches()

        # Now we can freshly import the module and run the user's application.
        # (The real version wraps this in error handling and log redirection.)
        user_app = importlib.import_module(f"updated_content.{module_name}.app")
        user_app.run(event)
```

This has proven extremely reliable, and it’s lightning fast: timing information indicates that we can copy the file tree, layer the files from the Event payload on top, and import the new module, all in about 1 second or less.
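One side effect of this approach is that a warm container accumulates copies: every run adds another folder under /tmp (512 MB by default, configurable up to 10 GB) and another set of entries in `sys.modules`. A minimal sketch of pruning everything except the latest copy, assuming the `updated_content.<random name>` layout from the snippet above; `prune_old_copies` is a hypothetical helper, not something the post says Integral Cloud does:

```python
import shutil
import sys
import tempfile
import types
from pathlib import Path

PACKAGE = "updated_content"  # parent package name used by the fresh imports


def prune_old_copies(root: Path, keep: str) -> None:
    """Forget every fresh-import copy except `keep` (hypothetical helper)."""
    current = f"{PACKAGE}.{keep}"
    for name in list(sys.modules):
        is_copy = name.startswith(f"{PACKAGE}.")
        if is_copy and name != current and not name.startswith(current + "."):
            # Dropping the sys.modules entry lets the module be garbage
            # collected once nothing else references it.
            del sys.modules[name]
    for child in root.iterdir():
        if child.is_dir() and child.name != keep:
            shutil.rmtree(child)  # also frees space in /tmp


# Demo with a temporary directory standing in for /tmp/integral/updated_content.
root = Path(tempfile.mkdtemp())
for copy_name in ("oldCopy", "newCopy"):
    (root / copy_name).mkdir()
    module_key = f"{PACKAGE}.{copy_name}.app"
    sys.modules[module_key] = types.ModuleType(module_key)

prune_old_copies(root, keep="newCopy")
print(sorted(p.name for p in root.iterdir()))  # ['newCopy']
```

Removing an entry from `sys.modules` only lets the old module be collected once nothing else references it, so this trims growth rather than guaranteeing memory is returned.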

A festival of design considerations

The example code above is heavily simplified for readability. In reality, Integral Cloud also wraps significant amounts of error handling around the attempted imports (to guard against syntax errors), and redirects both stdout and stderr away from AWS Lambda’s default destination for them (CloudWatch Logs) in favor of our own log collection, retention, and display capability. How Integral Cloud handles output redirection in AWS Lambda will likely be the subject of the next post in this series, so stay tuned.
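The real implementation is saved for the next post, but the general idea can be sketched with the standard library’s `contextlib.redirect_stdout` and `contextlib.redirect_stderr`. This is a generic illustration, not Integral Cloud’s code: `run_captured`, `good_app`, and `bad_app` are hypothetical, and the captured text is simply returned instead of being shipped to a log store:

```python
import contextlib
import importlib
import io
import sys
import tempfile
import traceback
from pathlib import Path


def run_captured(module_name: str, event: dict) -> dict:
    """Import `module_name`, call its run(event), and capture all output.

    Errors, including a SyntaxError raised while importing, are reported in
    the returned dict instead of propagating to the Lambda runtime.
    """
    out, err = io.StringIO(), io.StringIO()
    ok = True
    with contextlib.redirect_stdout(out), contextlib.redirect_stderr(err):
        try:
            user_app = importlib.import_module(module_name)
            user_app.run(event)
        except Exception:
            ok = False
            traceback.print_exc()  # writes to sys.stderr, which is redirected here
    return {"ok": ok, "stdout": out.getvalue(), "stderr": err.getvalue()}


# Demo: one well-formed app and one with a syntax error (missing colon).
workdir = Path(tempfile.mkdtemp())
(workdir / "good_app.py").write_text("def run(event):\n    print('hello', event['name'])\n")
(workdir / "bad_app.py").write_text("def run(event)\n    pass\n")
sys.path.insert(0, str(workdir))
importlib.invalidate_caches()

good = run_captured("good_app", {"name": "Ada"})
bad = run_captured("bad_app", {})
print(good["stdout"], bad["ok"])
```

`redirect_stdout` swaps `sys.stdout` for the whole process, so this pattern assumes only one invocation runs in the process at a time.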

There is also plenty of logic in place to help determine when we should use these dynamically imported portions of code. When working in the embedded IDE, we want to reflect a user’s changes instantly. When invoked outside of the IDE experience, though, we want to use the code that has been saved to S3, built (with its library dependencies installed), and deployed. For the moment, Integral Cloud handles both of those cases seamlessly in the same AWS Lambda deployment.
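The routing itself can be small. A sketch, assuming (as in the snippets above) that only the IDE sends `updated_content`; `choose_app` and the two loader callables are hypothetical names, passed in so the decision can be tested without AWS:

```python
from types import ModuleType
from typing import Callable


def choose_app(event: dict,
               load_deployed: Callable[[], ModuleType],
               load_fresh: Callable[[list], ModuleType]) -> ModuleType:
    """Pick which copy of the user's code should handle this invocation."""
    updated = event.get("updated_content")
    if updated:
        # Invoked from the IDE with unsaved edits: build a fresh copy.
        return load_fresh(updated)
    # Invoked normally: use the code that was saved, built, and deployed.
    return load_deployed()


# Demo with stand-in modules instead of real imports.
deployed = ModuleType("app")
deployed.run = lambda event: "deployed"
fresh = ModuleType("fresh_app")
fresh.run = lambda event: "fresh"

ide_event = {"action": "execute",
             "updated_content": [{"filename": "app.py", "content": "..."}]}
api_event = {"action": "execute"}
print(choose_app(ide_event, lambda: deployed, lambda files: fresh).run(ide_event))  # fresh
print(choose_app(api_event, lambda: deployed, lambda files: fresh).run(api_event))  # deployed
```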

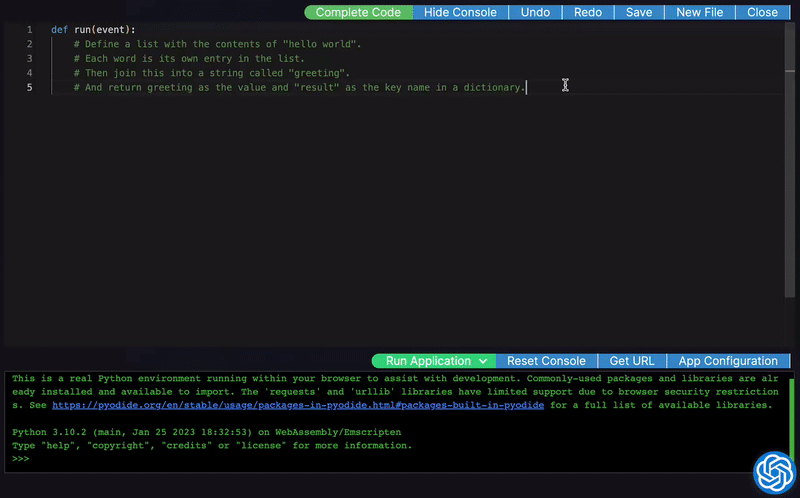

Finally, Integral Cloud also offers a full in-browser Python REPL (powered by Pyodide), in which users’ apps can also be tested very quickly. At the time of writing, I am still using importlib.reload in the in-browser environment, but the UI offers a “Reset Console” button which restores the environment to pristine condition. I may revisit this decision in the future, depending on user feedback. Many users probably won’t have 16 GB MacBook Pros on their desks, so memory management is likely to matter much more for the in-browser experience than for the AWS Lambda deployment environment.
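The post doesn’t describe how “Reset Console” works internally. As one hypothetical approach, a console can keep its own global namespace and evict just the user’s modules from `sys.modules`; this behaves the same in Pyodide as in regular CPython, since both use the standard import system. `Console` and `helpers` are illustrative names:

```python
import importlib
import sys
import tempfile
from pathlib import Path


class Console:
    """A toy REPL session that can be reset to a clean state (hypothetical)."""

    def __init__(self, user_dir: str) -> None:
        self.user_dir = Path(user_dir).resolve()
        self.namespace: dict = {"__name__": "__console__"}

    def run(self, source: str) -> None:
        exec(source, self.namespace)

    def reset(self) -> None:
        # Evict the user's own modules so the next import re-reads them from
        # disk; standard-library and third-party modules stay loaded.
        for name, module in list(sys.modules.items()):
            path = getattr(module, "__file__", None)
            if path and self.user_dir in Path(path).resolve().parents:
                del sys.modules[name]
        # Start over with a fresh global namespace for the console.
        self.namespace = {"__name__": "__console__"}


# Demo: a user module imported in the console disappears after reset().
workdir = tempfile.mkdtemp()
Path(workdir, "helpers.py").write_text("VALUE = 1\n")
sys.path.insert(0, workdir)
importlib.invalidate_caches()

console = Console(workdir)
console.run("import helpers\nx = helpers.VALUE")
print(console.namespace["x"])    # 1
console.reset()
print("helpers" in sys.modules)  # False
```

Evicting only the user’s modules, rather than everything imported since startup, sidesteps the kind of trouble described earlier: some compiled extension modules don’t tolerate being initialized twice in one process.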

More to come!

As I mentioned at the top of the post, this is the first in what will hopefully be a series of posts sharing what I’ve learned throughout the process of building Integral Cloud. I love to build and I love to write, so it’s been a lot of fun to take things this far. Thanks for reading!

Footnote

1. Python is now among the most widely taught introductory programming languages at U.S. universities, and is part of the standard curriculum in a number of programs, including engineering, liberal arts, and business disciplines.