Views expressed within this post are entirely my own, and may not reflect the views of my employer, their leadership, or their security staff.

One of the cooler things about how we run infrastructure at my company is our remote access story. It’s basically super super secure magic. I’ve talked to a lot of my security architect peers and auditors in the industry, and as far as I can tell, we kind of accidentally invented an innovative way of doing things, through a mixture of commercial solutions and homegrown software. I thought it would be fun to do a technical deep-dive on how the industry operates legacy remote access solutions, versus how we implement remote access today.

Friends don’t let friends use VPNs

I’m going to start strong with a hot take:

All VPNs are garbage.

VPNs, like all things in computing, can be carefully configured such that if they get hacked, the world doesn’t end. Nobody actually does… but theoretically they could!

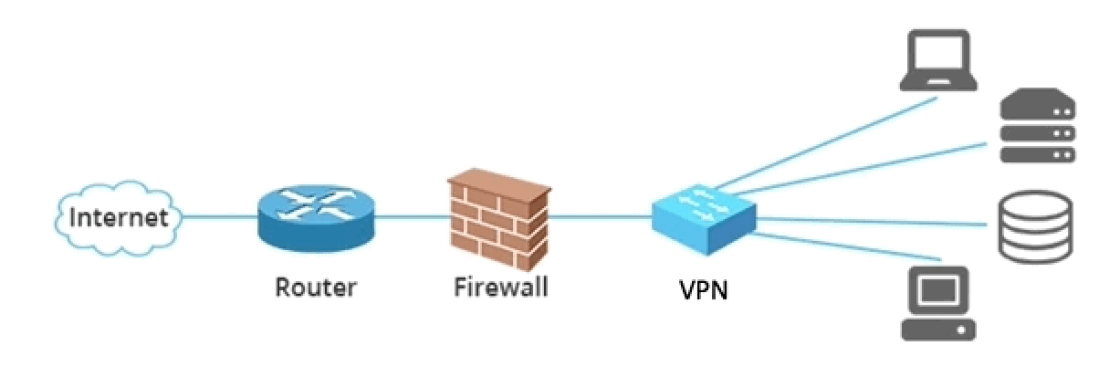

In 99.95% of cases, VPNs are set up to:

- Bridge a network device – such as a laptop or even another server

- … into a larger network of servers – such as in the cloud or on-prem

- … across the Internet – protected with an additional layer of encryption

This is not a great idea. What if your laptop has malware on it and you VPN into a production network? Tada, you’ve just granted malware local network-level access to your production infrastructure! What do you win? Sadness. Lots of sadness.

Okay, so the malware thing might be a bit contrived. What about a hacker compromising the VPN itself, perhaps through a vulnerability within the VPN device or software, in order to escalate directly into the target network unchecked? Now that’s the ticket, and it’s far from theoretical. For details, feel free to read this write-up about how the Heartbleed vulnerability was used to hijack VPN access, through an attack vector I warned about right here in this blog.

We’ve seen a rash of recent VPN vulnerability announcements, and these are being immediately utilized by threat actors around the globe to gain access to target networks. It makes sense though, right? These systems are Internet-facing, with no other protection mechanisms in front of them. Patching is typically not automatic, and involves proprietary update mechanisms managed by proprietary software running on a proprietary OS. Good luck securing that.

Are these VPN devices hard to find? Before writing this blog post, I’d never gone searching, so I didn’t know for sure. I spent about 30 minutes combing Shodan.io and here are a few of the high-profile results that came back:

- Thomson Reuters – a $41 billion company with 26,000 employees, which gets half of its revenue from financial services

- SAP Concur – hacking travel and expense management service SAP Concur would allow us to see all sorts of great PII and payment information

- Progressive Insurance – PII and PHI, with some payment info in the mix

- Chevron Phillips Chemical – I think this one speaks for itself

Well, that’s probably not good. If these things are so trivial to find, it seems non-ideal to expose them to the Internet. Do we have any other choice?

Zero Trust

Zero Trust basically means that you authorize every connection, versus assuming that something is trustworthy because it’s already inside of your network. If you want a better high-level understanding of this term and shift in thinking, read this Network World article (apologies for yet another shameless self-promotion).

To facilitate Zero-Trust logins to production servers, we purchased Okta’s solution in this space, “Okta Advanced Server Access” (OASA). The OASA solution is awesome for three reasons:

1. It’s just a super-powered configuration wrapper around OpenSSH

Under the hood, the OASA platform is a well-managed deployment of OpenSSH (i.e. the ssh command on your computer). OpenSSH is an extremely well-tested and secure solution for remote administration, and hasn’t had a vulnerability that could lead to unauthorized remote access* (in its default configuration) since 2003.

The network entry points themselves are simple single-function Amazon Linux 2-based EC2 instances, meaning the attack surface is extraordinarily small. Remember: one of the largest issues with VPN appliances is the proprietary software / OS configurations which preclude automatic patching; being able to patch our network entry points along with the rest of our infrastructure is a big win.

2. No network bridging

If you recall from above, most VPNs are configured to bridge a network device, such as a laptop, into a larger network of servers across the Internet. One of my biggest pet peeves about VPNs is that they hijack all your network traffic. They can be configured not to, but our customers and security controls like NIST 800-53 SC-7(7) typically require that they do.

This is a good example of security controls falling way behind where the industry is actually at. In the old-school world, that VPN might be the only thing encrypting your traffic. The auditors sometimes think that without the protection of the VPN, you might deliver your secret sauce via unencrypted channels instead. So that’s how you end up running your end users’ Slack traffic through your production VPC.

But there’s a better way, thankfully. In the OASA model, connectivity is individually brokered between you and the server. For example, requesting “I want to be on EC2 instance i-028d62efa6f0b36b5” causes your system to hop to a network entry point, and then hop again to the destination server. OASA also protects these hops by issuing client certificates with 10-minute expirations after first verifying your identity through our single sign-on provider, and then also verifying you are on a pre-enrolled (and approved) trusted company device.
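To make the brokering step a bit more concrete, here is a minimal sketch of the decision it describes: a credential is only handed out after both the identity check and the device check pass, and it stops being useful after 10 minutes. This is not OASA's actual implementation. `AccessRequest`, `Credential`, and `issue_credential` are hypothetical names of my own, and the real product issues signed client certificates rather than a plain record like this.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Matches the 10-minute certificate expiry described in the post.
CREDENTIAL_LIFETIME = timedelta(minutes=10)


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request record; field names are illustrative only."""
    user: str
    sso_verified: bool     # identity confirmed by the single sign-on provider
    device_enrolled: bool  # device is pre-enrolled and approved
    target: str            # e.g. an EC2 instance ID


@dataclass(frozen=True)
class Credential:
    """Stand-in for a short-lived client certificate."""
    user: str
    target: str
    expires_at: datetime

    def is_valid(self, now: datetime) -> bool:
        return now < self.expires_at


def issue_credential(req: AccessRequest, now: datetime) -> Optional[Credential]:
    """Issue a short-lived credential only if both checks pass."""
    if not (req.sso_verified and req.device_enrolled):
        return None
    return Credential(req.user, req.target, now + CREDENTIAL_LIFETIME)
```

The point of the short lifetime is that a stolen credential is only useful for a few minutes, and every new connection has to pass both checks again.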

There’s not a lot of freedom to just go wandering around. An administrator can log in to a network entry point and then port forward to another destination if they want to, but that has to be explicitly requested when the connection is set up, and the feature is off by default. Best of all, by not calling this solution a VPN, nobody requires me to route all our traffic out through the production VPCs.

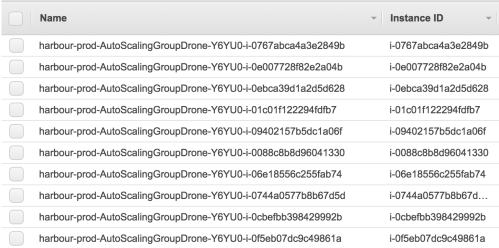

3. Scoped network access and random IPs

These network entry points are deployed on a per-VPC basis (e.g. one for prod, one for staging, one for dev, etc). Additionally, each is very closely monitored by our host protection solution, which logs all activity and filters traffic. Should an attacker find themselves on one of these network entry points, there’s also not really much they can do. In all cases, our security model does not permit access to protected resources simply because you are already within the VPC.

One of my favorite protection mechanisms was discovered completely by accident. When initially setting up the network entry points, each was configured to have a static IP address from AWS. We quite quickly discovered that these IP addresses would sometimes not get attached to the EC2 instance in a timely manner, which would cause OASA to not configure itself correctly. After trying what felt like 10 different fixes in production, I eventually got pissed off and just removed the static IP stuff entirely – and then it totally worked.

OASA just needs an Internet-facing IP, that’s it. It doesn’t have to be previously known or anything. When your client is ready to make a connection, under the hood it’s actually requesting the hop’s unique GUID and then resolving the IP from that:

- User: “I want to log in to the hop for `vpc-99f2acff`”

- OASA Client App: “I resolved the hop `vpc-99f2acff` to a known server with the GUID `25af5d4f-e657-4583-b0bd-beb1ca4f0c1f`”

- OASA Server: “`25af5d4f-e657-4583-b0bd-beb1ca4f0c1f` can be reached at `3.22.198.24`, here are the requisite certificates.”

- OASA Client App: “Placed certificates, dialing `3.22.198.24` via SSH…”
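The exchange above boils down to two lookups: VPC to GUID, then GUID to whatever IP the hop currently has. Here is a rough sketch of that idea using the example values from the conversation. The dictionaries and the `resolve_hop` function are hypothetical stand-ins for state that really lives on the OASA side; I am not describing its actual API.

```python
from typing import Dict

# Hypothetical directory data. The GUID is the stable identity of the hop.
HOP_GUID_BY_VPC: Dict[str, str] = {
    "vpc-99f2acff": "25af5d4f-e657-4583-b0bd-beb1ca4f0c1f",
}

# Assumed to be refreshed whenever a network entry point is redeployed
# and comes up with a new public IP.
ADDRESS_BY_GUID: Dict[str, str] = {
    "25af5d4f-e657-4583-b0bd-beb1ca4f0c1f": "3.22.198.24",
}


def resolve_hop(
    vpc_id: str,
    guid_by_vpc: Dict[str, str],
    address_by_guid: Dict[str, str],
) -> str:
    """Resolve a VPC's hop to its current IP via the hop's stable GUID."""
    guid = guid_by_vpc[vpc_id]    # client side: VPC -> GUID
    return address_by_guid[guid]  # server side: GUID -> current IP
```

Because clients only ever hold the GUID, the IP behind it can change on every deploy without anyone needing to update a config file.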

This means that every deploy of our network entry point infrastructure (its own separate post that you may enjoy) comes with a brand-new set of IP addresses. For any given network entry point, a random attacker has a few tens of millions of IPs (and rising every day) to sift through. Sadly for them, such a search is futile thanks to…

Enterprise Port Knocking

Port knocking is something nobody actually uses in the real world, but is a lot of fun to set up. In short, port knocking is a sequence of hits to various closed network ports, and if you get that sequence right, the “real” port opens up for use to your IP. It’s neat, but impractical in an actual enterprise.
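If you have never seen classic port knocking, here is a tiny in-memory simulation of the idea. It is only a sketch: `KnockTracker` is a hypothetical class of my own, it does no real packet handling or firewalling, and real implementations usually add timeouts between knocks.

```python
from typing import Dict, Sequence, Set


class KnockTracker:
    """Tracks per-IP progress through a secret sequence of port hits."""

    def __init__(self, sequence: Sequence[int]) -> None:
        if not sequence:
            raise ValueError("knock sequence must not be empty")
        self._sequence = tuple(sequence)
        self._progress: Dict[str, int] = {}  # source IP -> next index expected
        self._allowed: Set[str] = set()      # IPs the "real" port is open to

    def knock(self, source_ip: str, port: int) -> None:
        position = self._progress.get(source_ip, 0)
        if port == self._sequence[position]:
            position += 1
        else:
            # A wrong knock resets progress; it may still count as a first knock.
            position = 1 if port == self._sequence[0] else 0
        if position == len(self._sequence):
            self._allowed.add(source_ip)
            position = 0
        self._progress[source_ip] = position

    def is_allowed(self, source_ip: str) -> bool:
        return source_ip in self._allowed
```

For example, with the sequence `[7000, 8000, 9000]`, an IP that hits those three ports in order is allowed, while one that hits 7000 and then 9000 is sent back to the start.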

I was inspired by the idea of port knocking, and thought about how we might be able to iterate on the concept. Thus was commissioned a solution I call Enterprise Port Knocking.

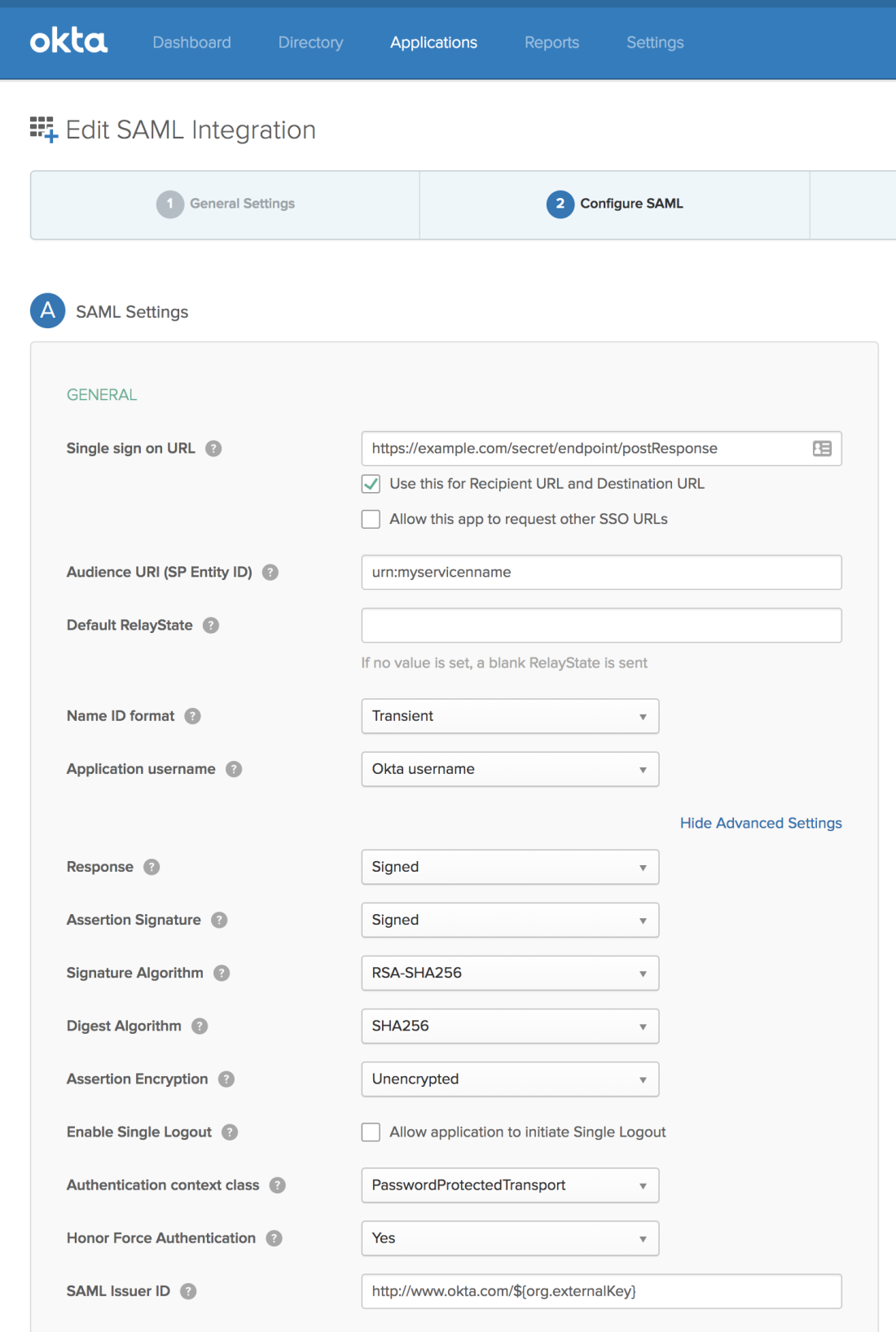

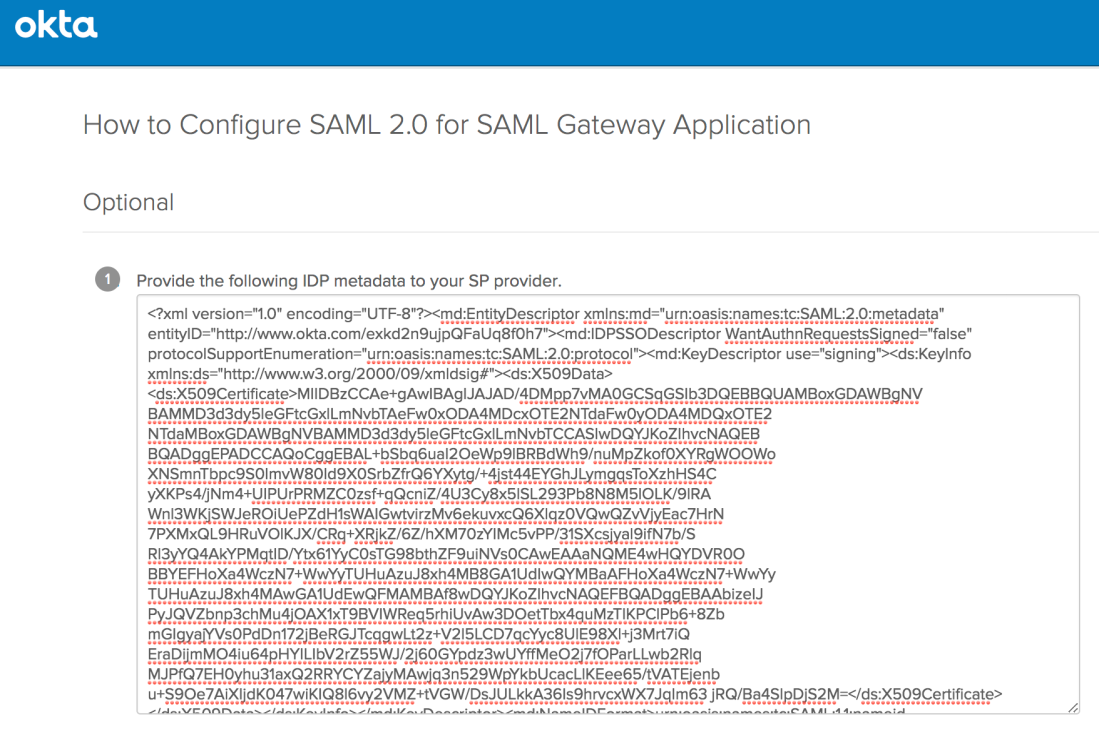

I wanted to create a mechanism that would ensure our network entry points would remain firewalled off from the Internet until someone needed to access them. That mechanism needed to be easy to use, reliable, and authenticate through our existing identity provider.

I drew up the rudimentary architecture of this mechanism and then ran over to our extraordinarily talented engineering team. Within a couple of weeks, we were in production.

The service is pretty straightforward, and is deployed as an AWS Lambda function accessed through AWS API Gateway (the joys of serverless architecture!) for simple and reliable use. Operating the mechanism is easy:

- User successfully authenticates via single sign-on

- App traverses configured AWS accounts, looking for a specially-tagged Security Group (AWS’ concept of firewall rules)

- App updates the Security Group to allow requestor’s IP address. The Security Group rule has a tag with its creation time.

- A cleanup cron runs regularly to remove previously-allowed IPs after a configurable amount of time
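The steps above could be sketched roughly like this. To be clear, this is not our production code. It assumes the single sign-on check has already happened in front of the function, that each `ec2` argument is a boto3 EC2 client (one per configured account), and that the tag names `epk-managed` and `epk-created-at` and port 22 are placeholders I made up for illustration. Pagination and duplicate-rule errors are ignored to keep it short.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, Iterable, List

MANAGED_TAG = "epk-managed"     # hypothetical tag marking the Security Group
CREATED_TAG = "epk-created-at"  # hypothetical tag holding rule creation time
SSH_PORT = 22                   # assumed port for the network entry point


def find_tagged_groups(ec2: Any) -> List[str]:
    """Return IDs of Security Groups carrying the special tag."""
    resp = ec2.describe_security_groups(
        Filters=[{"Name": f"tag:{MANAGED_TAG}", "Values": ["true"]}]
    )
    return [group["GroupId"] for group in resp["SecurityGroups"]]


def allow_ip(ec2_clients: Iterable[Any], requester_ip: str, now: datetime) -> None:
    """Open the port to the requester's IP, tagging the rule with its creation time."""
    for ec2 in ec2_clients:
        for group_id in find_tagged_groups(ec2):
            # Note: the real API raises an error if an identical rule already exists.
            ec2.authorize_security_group_ingress(
                GroupId=group_id,
                IpPermissions=[{
                    "IpProtocol": "tcp",
                    "FromPort": SSH_PORT,
                    "ToPort": SSH_PORT,
                    "IpRanges": [{"CidrIp": f"{requester_ip}/32"}],
                }],
                TagSpecifications=[{
                    "ResourceType": "security-group-rule",
                    "Tags": [{"Key": CREATED_TAG, "Value": now.isoformat()}],
                }],
            )


def expired_rule_ids(
    rules: Iterable[Dict[str, Any]], now: datetime, ttl: timedelta
) -> List[str]:
    """Pick out rules whose creation-time tag is at least `ttl` old."""
    expired = []
    for rule in rules:
        tags = {tag["Key"]: tag["Value"] for tag in rule.get("Tags", [])}
        created = tags.get(CREATED_TAG)
        if created and now - datetime.fromisoformat(created) >= ttl:
            expired.append(rule["SecurityGroupRuleId"])
    return expired


def cleanup(ec2_clients: Iterable[Any], now: datetime, ttl: timedelta) -> None:
    """The cron step: revoke previously-allowed IPs once they have aged out."""
    for ec2 in ec2_clients:
        for group_id in find_tagged_groups(ec2):
            resp = ec2.describe_security_group_rules(
                Filters=[{"Name": "group-id", "Values": [group_id]}]
            )
            stale = expired_rule_ids(resp["SecurityGroupRules"], now, ttl)
            if stale:
                ec2.revoke_security_group_ingress(
                    GroupId=group_id, SecurityGroupRuleIds=stale
                )
```

Rules without the creation-time tag are deliberately left alone, so the cleanup job never touches anything it did not create.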

Thanks to this service, we now boast a remote access solution which is entirely closed off from the Internet, requiring two-factor authentication via our user directory before even opening the firewall port.

Oh, and it’s easy too!

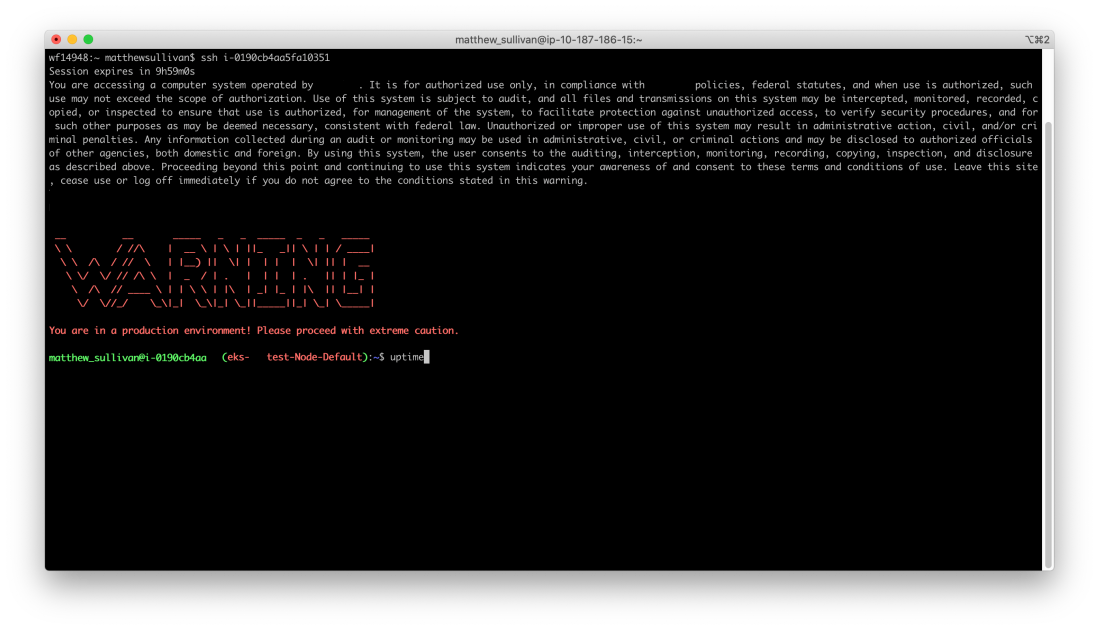

One thing I didn’t touch on was how easy these mechanisms are to use. I know it’s a lot of pieces, but when put together the login flow is quite simple:

- Log in to single sign-on, if not already

- Click the Enterprise Port Knocking connector in the SSO portal

- In your terminal, use the `ssh` command and state your destination as the desired EC2 instance’s ID. OASA is smart enough to figure out which network entry point to use and the rest is entirely automatic!

This system has been a big win for our infrastructure staff, for our compliance program, and for the security of our customers. Users love how easy it is to access our servers without needing to authenticate yet again or remember which VPN to use. Meanwhile, I love how much better I sleep at night 😴. With our new model, everybody wins!

Well, everybody but the hackers.